The invention of the MAGNITUDE SYSTEM is often attributed to HIPPARCHOS (2nd century BC), but this is only a conjecture. The system probably did precede PTOLEMY (circa 100–175) (To-16).

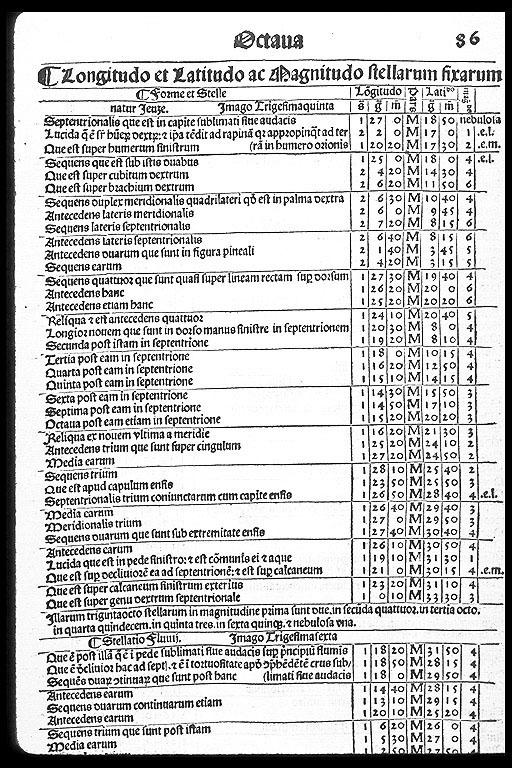

In any case, Ptolemy effectively established the traditional semi-qualitative system in the star catalogue of his ALMAGEST. He presented the longitudes, latitudes, and magnitudes of 1022 fixed stars divided into 48 constellations, plus a handful of nebulae (No-113; To-14).

The magnitude system was a description of STAR BRIGHTNESS IN 6 CLASSES. 1st magnitude described the brightest stars, 2nd magnitude the next brightest, and so on down to 6th magnitude, which described the faintest stars that could normally be seen by the naked eye.

Ptolemy's catalog was certainly based on earlier work, but he probably made some additions himself.

The magnitude system is only semi-quantitative, since measurements were made by the naked eye and magnitudes were assigned by comparing stars with one another.

When the TELESCOPE was invented in 1608 (No-328, Lecture 4.8), vast numbers of stars fainter than 6th magnitude were observed, and the magnitude scale was eventually extended to higher values (lower brightnesses).

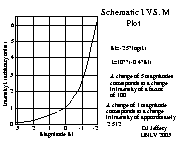

In the 19TH CENTURY it became possible to measure the INTENSITY OF LIGHT (i.e., energy per unit time per unit area) in different wavelength bands. (In fact, the modern concept of ENERGY was itself first invented in the 19th century, so this ability could not have come any earlier.) It was then discovered that the TRADITIONAL MAGNITUDE SCALE corresponded to an approximately LOGARITHMIC measure of intensity. The psychophysical response of the eye to intensity is, in fact, approximately logarithmic, at least under some conditions: i.e.,
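The specific formula given in these notes is not reproduced in this section. As a hedged illustration of what "logarithmic" means here, the sketch below uses the relation as it is commonly stated today, m = -2.5 log10(I/I0). This follows Pogson's 1856 convention, in which a difference of 5 magnitudes is exactly a factor of 100 in intensity. The reference intensity I0 (the zero point assigned magnitude 0) and the function names are assumptions made for this example only. The minus sign makes fainter objects have larger magnitudes, matching the traditional scale.

```python
import math

# Pogson's ratio (assumed modern convention): 5 magnitudes = factor of 100,
# so one magnitude step is a factor of 100**(1/5), roughly 2.512.
POGSON_RATIO = 100 ** (1 / 5)


def magnitude(intensity: float, reference_intensity: float) -> float:
    """Hypothetical helper: magnitude of intensity I relative to an assumed
    zero-point intensity I0 that is defined to have magnitude 0.

    Fainter objects (smaller I) get larger magnitudes, hence the minus sign.
    """
    if intensity <= 0 or reference_intensity <= 0:
        raise ValueError("intensities must be positive")
    return -2.5 * math.log10(intensity / reference_intensity)


def intensity_ratio(m_faint: float, m_bright: float) -> float:
    """Hypothetical helper: how many times more intense the m_bright object
    is than the m_faint object, inverting the logarithmic relation."""
    return 10 ** (0.4 * (m_faint - m_bright))


# A 1st-magnitude star compared with a 6th-magnitude star (5 magnitudes apart).
print(intensity_ratio(6, 1))   # about 100
# A single magnitude step.
print(intensity_ratio(2, 1))   # about 2.512
# An object 100 times fainter than the zero point sits at magnitude +5.
print(magnitude(0.01, 1.0))    # about 5.0
```

Under this convention, the span from the brightest naked-eye stars (1st magnitude) to the faintest (6th magnitude) corresponds to roughly a factor of 100 in intensity.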

His formula for the magnitude M of an object of intensity I is
